I am currently working on a project were we are collecting huge amount of data. Recently we discovered that millions of rows were not unique. Because we are using Machine Learning to find patterns in the data and to cluster it, these dublicate rows were polluting the results of the learning algoritme. Therefor we had to come up with a solution to remove all dublicates and prevent new dublicates in the future.

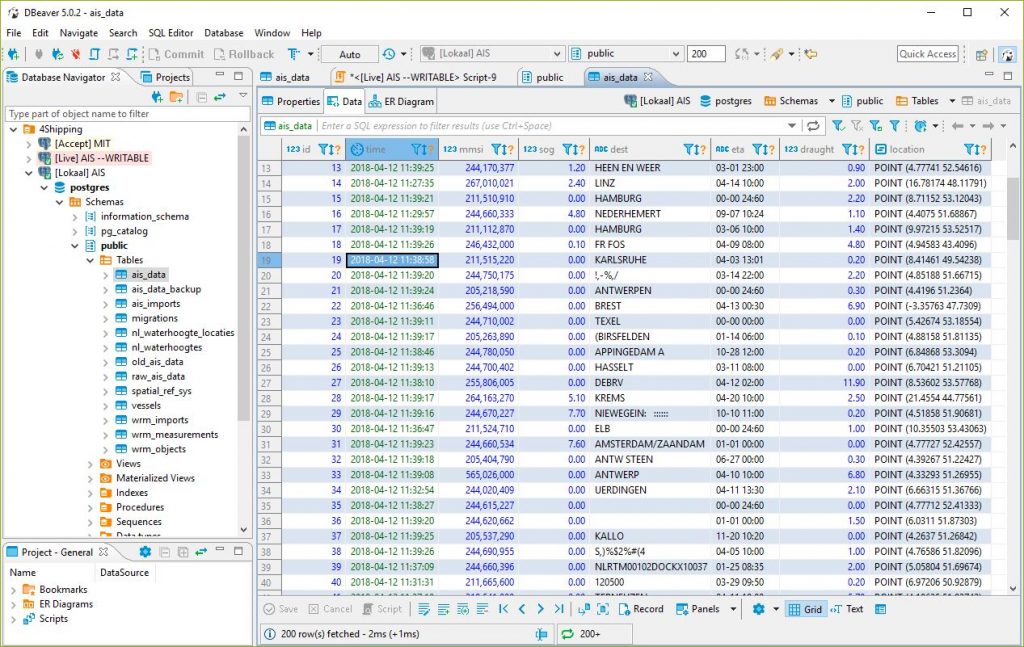

Figure 1: DBeaver | Free Universal SQL Client

My favorite PostgreSQL client

Before we start, I recently discovered that DBeaver is an excellent and free to use Universal SQL Client. Before I used HeidiSQL. Another excellent free SQL client but not that good with PostgreSQL database. In my case it crashed or showed unexpected behaviour with PostgreSQL. So if you work alot with PostgreSQL and looking for a free SQL client take a look at this one.

Lets make the data unique

The most simple solution, creating a query that finds all dublicates and deletes them is not suitable for such huge amount of data. It will take days before such query gives results if you dont run out of memory before that happens. For small tables its faster to use the DELETE approach. For us the only way to undublicate all results was to create a new table, add a unique constraint on this table, and insert all data from the original table into the new table. Ofcourse these inserts will fail as soon as it tries to insert a not unique value. Lucky for us PostgreSQL supports just like MySQL a way to ignore these inserts.

For small tables the DELETE approach is faster then the INSERT approach. But for large tables as in our situation its much slower!

Below you see the queries we used to undublicate the table of AIS data.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

-- First create a copy of the original database structure CREATE TABLE ais_data_unique ( LIKE ais_data INCLUDING DEFAULTS INCLUDING CONSTRAINTS INCLUDING INDEXES ); -- Now add new constraints ALTER TABLE ais_data_unique ADD UNIQUE(mmsi, "time"); -- Next insert all rows from source table into new table -- Ignore inserts that fail (dublicates) INSERT INTO ais_data_unique (SELECT * FROM ais_data) ON CONFLICT DO NOTHING; -- Rename source table and unique table ALTER TABLE ais_data RENAME TO old_ais_data; ALTER TABLE ais_data_unique RENAME TO ais_data; -- After comparing results drop source table DROP TABLE old_ais_data; |

Is it always the fastest way?

The above table had about 1 billion rows. It took about 3-4 hours to remove all dublicates this way. It was also a challenge to find out how much rows it had exactly. Normal count operations on such huge tables take forever. Therefor I used the following query to find out the total amount of rows:

|

1 2 3 |

SELECT reltuples::bigint AS estimate FROM pg_class WHERE oid = 'ais_data'::regclass; |

For completeness I took a subset of the above table (approximately 1 million rows). The above method took about 50 seconds for a much smaller table. I also tried the DELETE approach below:

|

1 2 3 4 5 6 7 8 |

delete from ais_data where id in ( SELECT A.id FROM ais_data A INNER JOIN (SELECT "time", mmsi FROM ais_data GROUP BY "time", mmsi HAVING COUNT(*) > 1) B ON A."time" = B."time" AND A.mmsi = B.mmsi) |

This took about 6 seconds and was therefor much faster. Likely this is because the indexes on the table are now more significant in the total amount of time.